AI design for children: Navigating the global landscape of ethical regulation

With so many ethical AI frameworks and regulations emerging across the globe, how can designers and innovators know what matters, what’s enforceable, and where to begin?

If you’re involved in designing, building, or leading AI-powered products and services for children, you’re probably feeling growing pressure to act responsibly. However, the global AI governance landscape can feel fragmented, vast and overwhelming. With policies, regulations, and ethical frameworks emerging rapidly across different countries, it’s hard to know what really matters, what you need to comply with, and how to stay ahead without slowing innovation.

This article breaks down the key global guidance - including the newly launched Children & AI Design Code - to help you quickly understand what’s relevant, so you can build ethical AI that not only keeps children safe but also preserves trust with families and drives positive impact.

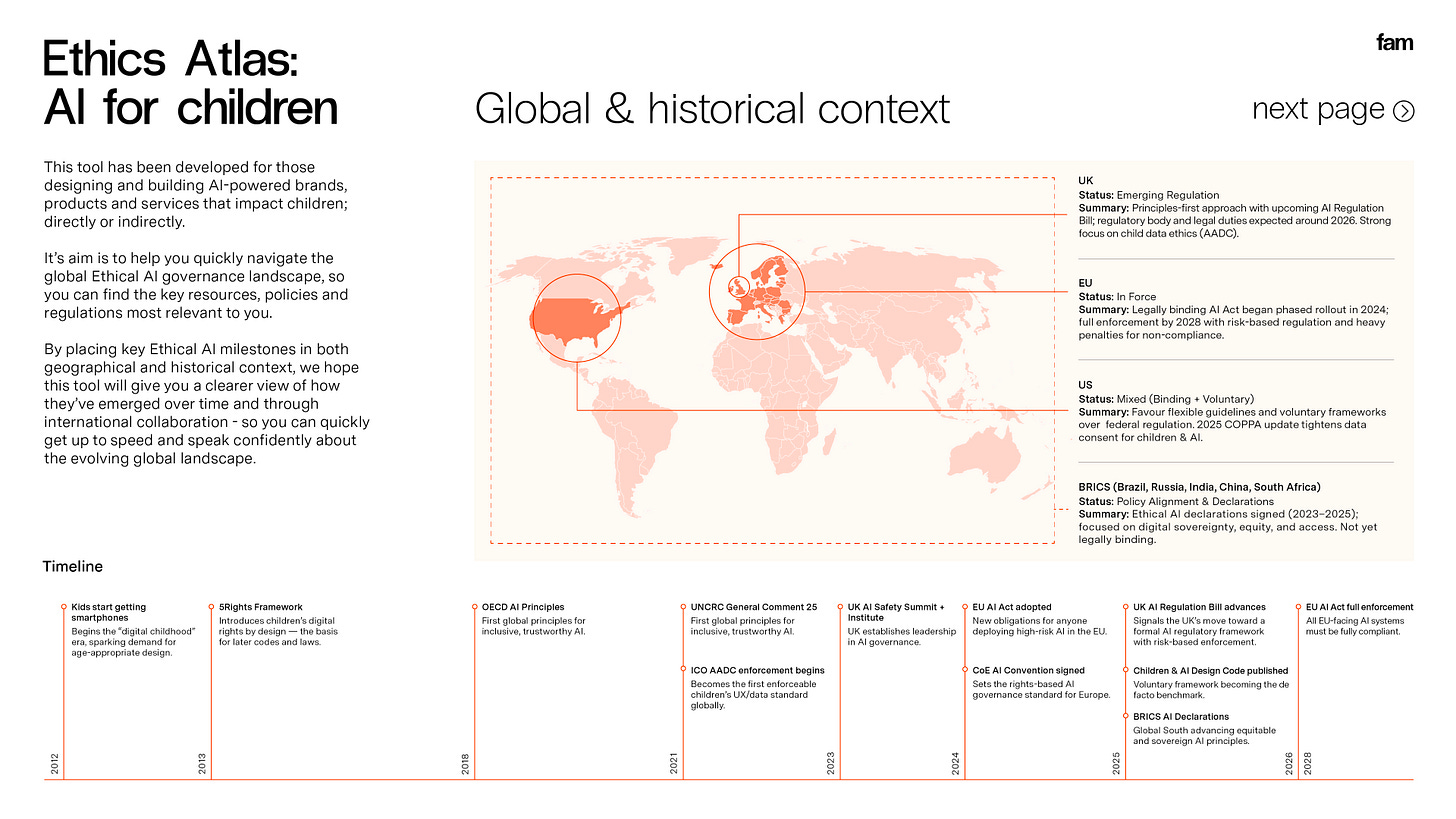

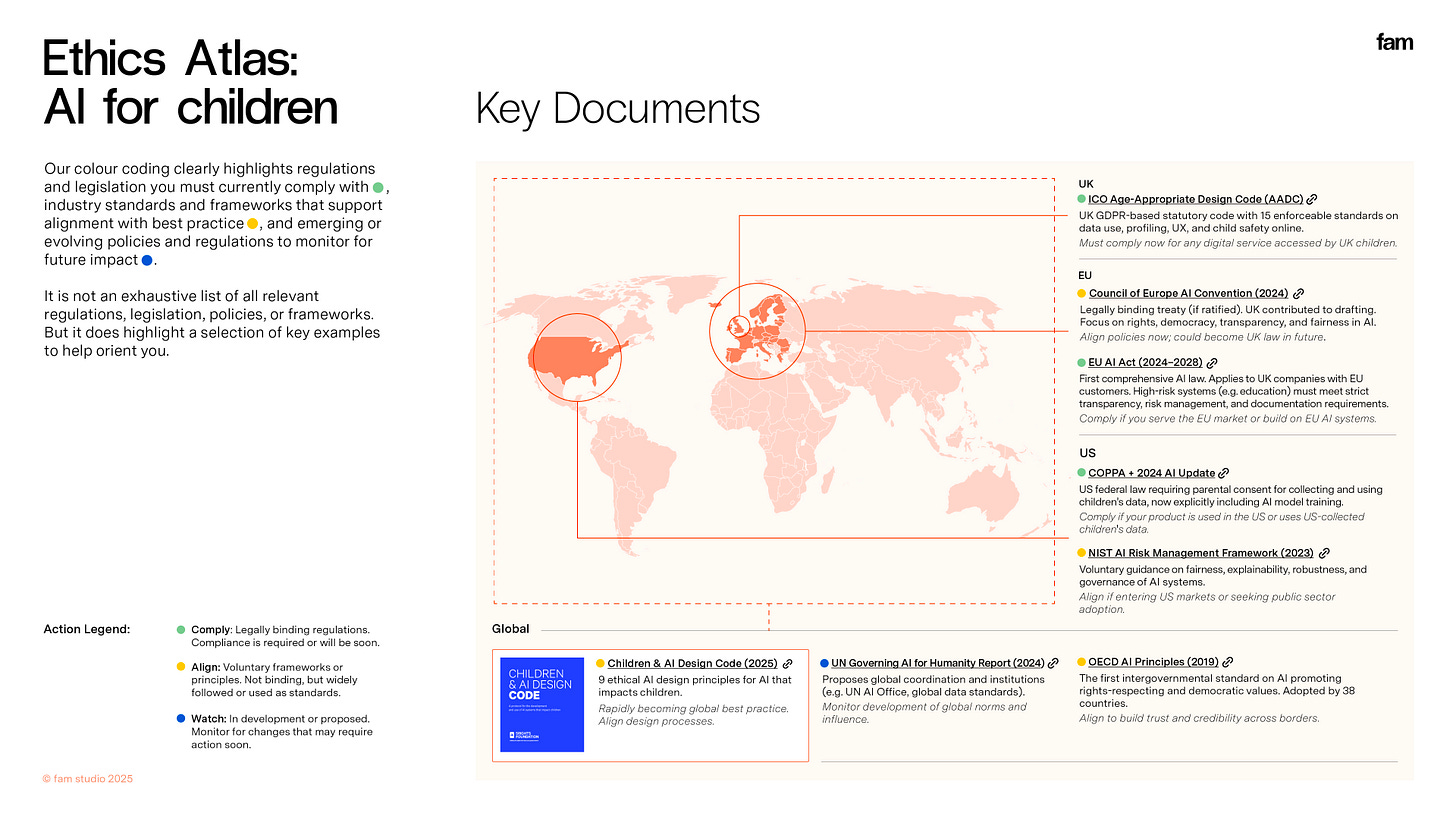

We’ve even developed a simple visual tool - the Ethics Atlas - to help you identify what’s most relevant to your organisation at a glance.

2025: A defining year for ethical AI & children

This year saw the launch of the Children & AI Design Code, developed by the 5Rights Foundation. It is the most comprehensive framework yet for designing AI in ways that prioritise and protect children’s rights throughout the AI system lifecycle.

The Code is not just another set of guidelines - it is the culmination of more than a decade of global thinking, policy development, and advocacy around children's rights in digital environments. Drawing on foundations such as the 5Rights Framework, the UNCRC’s General Comment No. 25, and the UK’s Age Appropriate Design Code, it translates high-level principles into clear, actionable criteria for developers, designers, and decision-makers. Though not a legal instrument, the Code is already shaping best practices and influencing emerging regulatory frameworks around the world.

Importantly, the Code didn’t emerge in isolation. It reflects and reinforces a much wider international effort to bring coherence and accountability to AI governance. Understanding the journey behind the Code - and the global forces it aligns with - helps clarify not only why it matters, but also why it’s gaining traction now.

On the shoulders of giants: How the Code emerged from a decade of global collaboration

The global conversation around ethical AI has transformed dramatically over the past decade - from abstract principles to structured governance, from voluntary declarations to binding laws. What began as a series of calls for rights and responsibility in the digital realm has matured into a complex, multilayered ecosystem of policies, frameworks, and regulatory instruments, many of which are now shaping how AI is designed, developed, and deployed - especially for children.

This evolution has been marked by unprecedented international collaboration. The early influence of the 5Rights Framework, rooted in the UN Convention on the Rights of the Child, helped ignite a global movement to embed child rights into digital design. As ethical concerns around AI grew, nations and organisations converged - through the OECD’s adoption of human-centric AI principles, the EU’s sweeping AI Act, and the Council of Europe Framework Convention on AI (the first international legally binding treaty on AI grounded in human rights).

What’s emerged is a tapestry of approaches, each shaped by differing political priorities and cultural attitudes toward regulation. The European Union has taken the boldest regulatory stance - introducing binding legislation that classifies AI systems by risk, imposes stringent requirements on high-risk use cases such as education, and insists on transparency, accountability, and human oversight. The UK, while not far behind, has opted for a “principles-first” model, signalling intent to regulate but currently favouring guidance over enforcement. The UK’s AI Regulation Bill has recently passed through the House of Lords, so UK innovators should watch this area closely.

In contrast, the United States has taken a softer route - emphasising innovation and market growth, with a mix of voluntary frameworks, executive policy, and sector-specific laws like COPPA. The NIST AI Risk Management Framework, for example, is entirely voluntary. This approach aims to cultivate leadership in AI development while gradually shaping ethical norms through non-binding guidance and institutional incentives. State-level laws tend to focus on children’s privacy and data in relation to AI, complementing COPPA’s federal rules on how children’s personal data is collected and handled.

Meanwhile, the BRICS nations are advancing a distinctly Global South perspective - prioritising sovereign development, ethical access, and inclusive innovation. Their recent declarations highlight a growing commitment to equity, child protection, and ethical literacy as part of AI’s role in social infrastructure.

Amidst this diverse and shifting terrain, many innovators - especially those working on products for children - feel the pressure to act ethically, but struggle to orient themselves.

The Ethics Atlas: A tool to navigate the complex global landscape of AI governance

To support innovators navigating this complex terrain, we’ve developed the Ethics Atlas - a resource designed to bring coherence to the fragmented global regulatory environment.

The tool brings together over a decade of global policy and advocacy work, visualising where regulation stands across different regions and how it has developed over time.

It offers a global map view with summaries of each territory’s approach to ethical AI and children, including direct links to key documents, laws, and declarations. You can explore what’s in force, what’s emerging, and what’s influential but voluntary - helping you quickly understand what’s most relevant for your organisation. We’ve placed particular emphasis on the Children & AI Design Code as a key framework for designing and developing AI systems with children's safety, rights, and well-being in mind.

Crucially, the Ethics Atlas distinguishes between what you need to comply with, what’s worth aligning with, and what to keep an eye on as future regulation develops. In a space where clarity is rare, it’s a way to see where you stand - and what’s coming next.

The ATLAS approach: First steps toward ethical AI for children

The Ethics Atlas isn’t just a map - it’s also a method to help you navigate the ethical AI landscape for children and families:

A - Aware: Start by becoming aware of what’s already out there. Use the Ethics Atlas to map existing laws, regulations, and voluntary frameworks relevant to your product or service - especially if you operate across borders.

T - Trust: Trust is becoming a key differentiator. Aligning early with respected voluntary frameworks - like the Children & AI Design Code or OECD AI Principles - can help build credibility with families, regulators, and procurement partners.

L - Landscape: Understand the broader landscape. Track where regulation is heading, not just where it is today. The Ethics Atlas highlights emerging laws and treaties to watch, so you can anticipate change rather than react to it.

A - Action: Translate insights into concrete action. Identify what you need to do now (e.g., compliance), where you should align (e.g., design codes), and what future changes to prepare for. Use this to inform product, design, and risk strategies.

S - Systematise: Finally, embed what you’ve learned. Ethical design isn’t a one-off decision; it’s a cultural shift. Equip your teams with shared principles, routines, and training to ensure ethics is built in, not bolted on.

The long view

Ultimately, the question of how we design AI for children isn’t just technical or regulatory - it’s generational. The systems we build today will shape the environments children grow up in tomorrow: how they learn, play, connect, and imagine what’s possible. That demands more than compliance. It calls for intention, care, and a commitment to ensuring that innovation helps children flourish. If we get this right, we don’t just build safer products - we help shape a future that is more just, more inclusive, and more human.

Interested in AI design for children?

We’re working with the University of Oxford to develop an Ethical AI Design for Children training programme. If you’re interested in hearing more, get in touch below.